Datadog Synthetic Monitoring Best Practices: NoBS’ Guide for SREs

What Is Datadog Synthetic Monitoring? (And Why SREs Care)

Datadog Synthetic tests are a powerful tool for running scheduled tests of many types against your sites and applications. In general, they serve as “black box” tests from external locations that mimic a customer's behavior and tell you whether your applications work as expected from the outside world. These tests can help put to rest the “it works on my machine” or “it works over VPN” type statements when customers report issues. Now, to completely contradict that last point, you can also run Datadog-provided test runner containers (private locations) in your own infrastructure, so you can still test private endpoints, internal portals, and the like.

They come in four flavors: API tests, Multistep API tests, Browser tests, and Mobile Application tests.

At first glance, API tests seem pretty narrowly scoped - what if you want to do more than check an API? Well, good news! This category actually contains tests for HTTP, gRPC, SSL, DNS, WebSocket, TCP, UDP, and ICMP.

Multistep API tests let you chain requests across endpoints: fetch data from one, send it to another, and validate the full chain of responses to confirm that an entire data flow is working across your architecture.

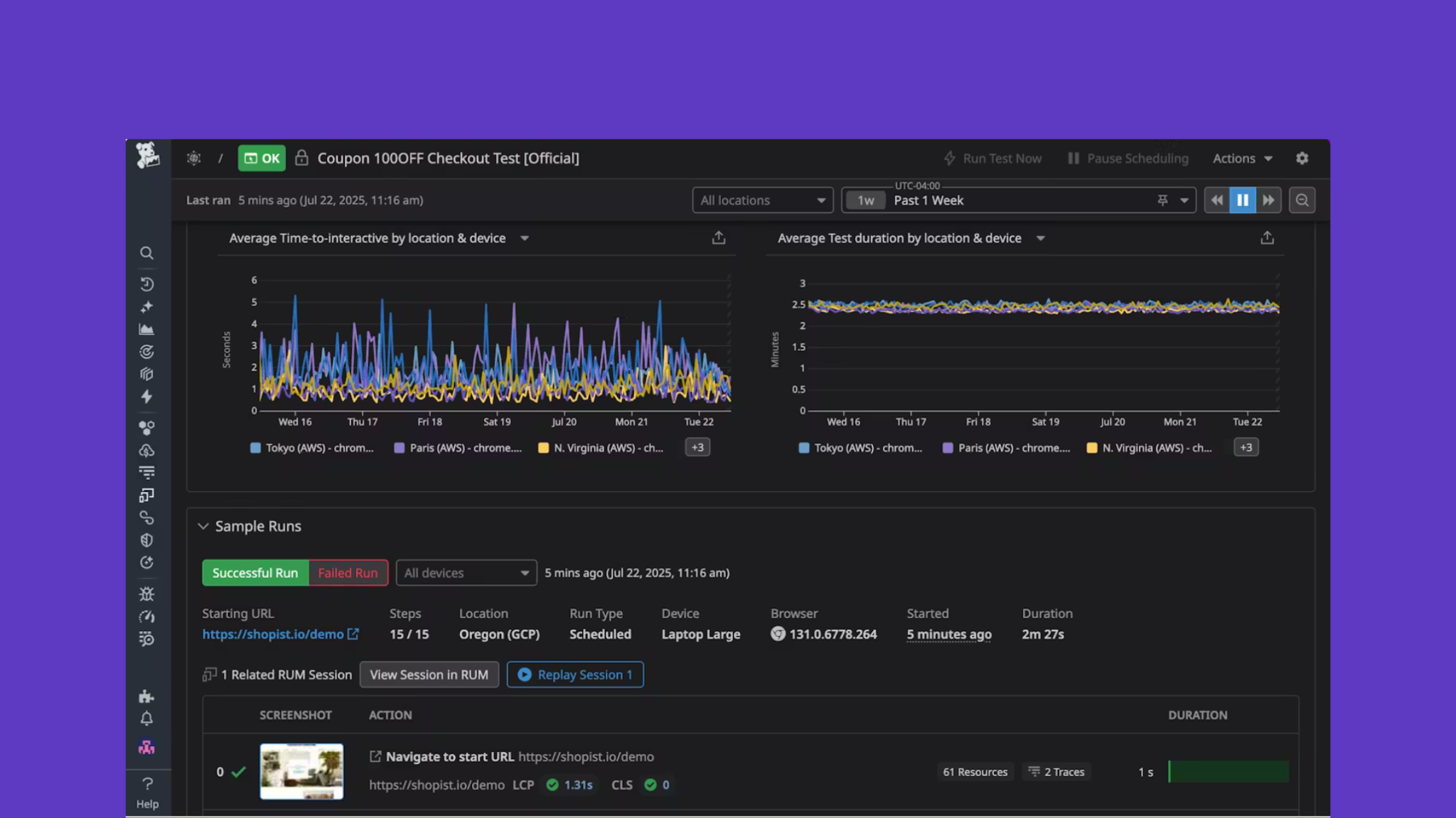

A Browser test allows you to record steps through a web application that Datadog will then run to show if your site is working as expected, if content loads appropriately, and so on. As an added benefit, if you’re not using RUM on your site, these tests can inject it to provide you with even more data!

Mobile Application tests are pretty fancy too - these allow you to upload your Android or iOS application and then run tests for business flows or user interactions on various types of real devices.

Now, without re-writing the rest of the Synthetic Monitoring documentation, let’s get into some of the parts that users can struggle with.

Best Practices for Choosing Datadog Synthetic Test Locations

Datadog provides over 25 globally distributed public locations to run your tests from, hosted across AWS, Azure, and GCP. By default, all locations are selected, but this behavior can be changed in the Synthetics Settings (and it should be!).

This is a common pitfall: each run from a location counts as an individual test run. So if you create one test that executes every minute from the (currently available) 29 locations, the monthly run count for this one test becomes 29 (locations) * 60 (test runs per hour) * 24 (hours in a day) * 30 (days in a month) = 1,252,800 test runs. Given that tests are billed on runs over the course of the month, even one test configured in this way can wind up being a significant cost.
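To make the arithmetic above concrete, here is a minimal sketch that compares the all-locations default with a trimmed-down selection. The function name and the three-location scenario are illustrative assumptions, and the result is a run count only; pricing is not modeled.

```python
def monthly_runs(locations: int, interval_seconds: int, days: int = 30) -> int:
    """Test runs generated by one test over `days` at a fixed interval."""
    runs_per_day = (24 * 60 * 60) // interval_seconds
    return locations * runs_per_day * days


# One test, every minute, from all 29 public locations (the example above).
all_locations = monthly_runs(locations=29, interval_seconds=60)

# Same test trimmed to three locations (hypothetical east/central/west pick).
three_locations = monthly_runs(locations=3, interval_seconds=60)

print(f"29 locations: {all_locations:,} runs/month")
print(f" 3 locations: {three_locations:,} runs/month")
```

Dropping from 29 locations to 3 cuts the run count for this one test by roughly 90%, without changing how often each location checks the site.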

That aside - which locations you select depends largely on what the test is going to be doing. If you’re running an SSL check to determine when your certificate will expire, do you really need that to run from multiple locations? Probably not. If you are a US-based company only serving US customers, do you need to execute tests from Europe and Asia Pacific? Also probably not. A general recommendation for this situation is to pick one east, one central, and one west location so you get a full spread of coverage for your site. If you are also serving other regions, pull those in as well!

To return to the earlier point about “it works on my machine” or “it works over VPN” type statements: if you have an application of considerable importance, you may want to run external AND internal tests so you can tell an internal failure from an external failure from a full outage.

How to Set Datadog Synthetic Test Frequency Without Blowing Budget or SLOs

The answer here is everyone's favorite - it depends! What exactly are you trying to monitor, and what is the purpose of the test? Using the SSL test example again, do you need to run this every minute if the expiration time is measured in days? Absolutely not. You’re better off running this once a day at most, and once a week is likely sufficient. If your test is there to make sure that your site is up and responding every minute of every day, you may want to consider a 30 second or 1 minute test interval.
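As a rough illustration of how much the interval alone matters, the sketch below counts runs per location over a 30-day month for a handful of intervals. The interval list is a hypothetical selection for comparison, not a statement of what every test type supports.

```python
SECONDS_PER_DAY = 24 * 60 * 60

# Hypothetical interval choices to compare, in seconds.
intervals = {
    "30 seconds": 30,
    "1 minute": 60,
    "5 minutes": 300,
    "1 day": SECONDS_PER_DAY,
    "1 week": 7 * SECONDS_PER_DAY,
}


def runs_per_30_days(interval_seconds: int) -> float:
    """Runs from a single location over a 30-day month."""
    return 30 * SECONDS_PER_DAY / interval_seconds


for label, seconds in intervals.items():
    print(f"{label:>10}: {runs_per_30_days(seconds):>10,.1f} runs per location")
```

An SSL expiry check on a daily schedule comes out to 30 runs a month per location, against 43,200 for the same check run every minute.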

Something else that comes up frequently in this part of the discussion is “what if I need a simple check for a 200 response in less than 1 second, more frequently than every minute, but Synthetics becomes cost prohibitive?” There is another option here that is often overlooked. The Agent's HTTP check integration (http_check) runs at the check interval of the Agent (which is 15s by default) and supports various request methods, authentication, payloads, content matching, and so on. While this data is included at no extra cost from the Agent, the tradeoff is that these checks only run from hosts where the Agent is installed, so they are internal rather than external checks.

Using Datadog Synthetic Tests Effectively: Alerts, Dashboards, and SLOs

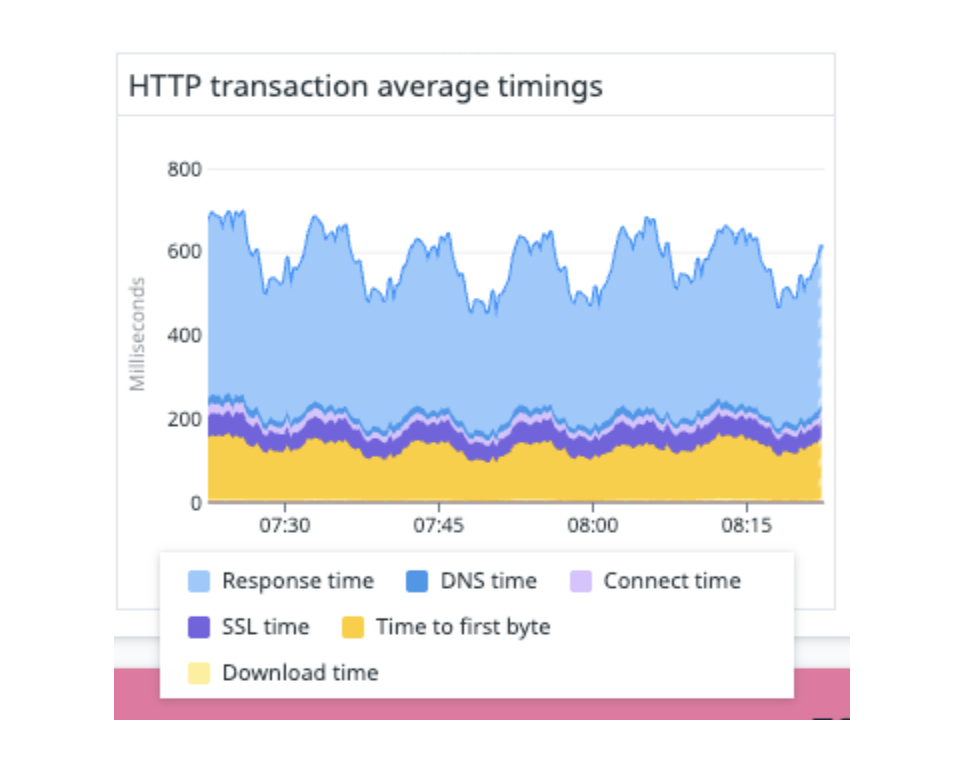

Besides the flexibility of the tests themselves, there are a number of ways to use the data they produce and the fact that they are running. First and foremost, every Synthetic test creates an alert, and you can write the alert body and notifications at the same time you create the test. If you’re testing for your application's health, it’s an obvious move to do this right off the bat. Secondly, all tests produce various metrics, and these are reflected on the out of the box dashboards provided by Datadog when tests are created. For example, with an API HTTP test, you can break down the various phases of a request to determine where the majority of time was spent.

Browser tests produce common Lighthouse metrics like LCP and CLS, as well as timing for each individual step.

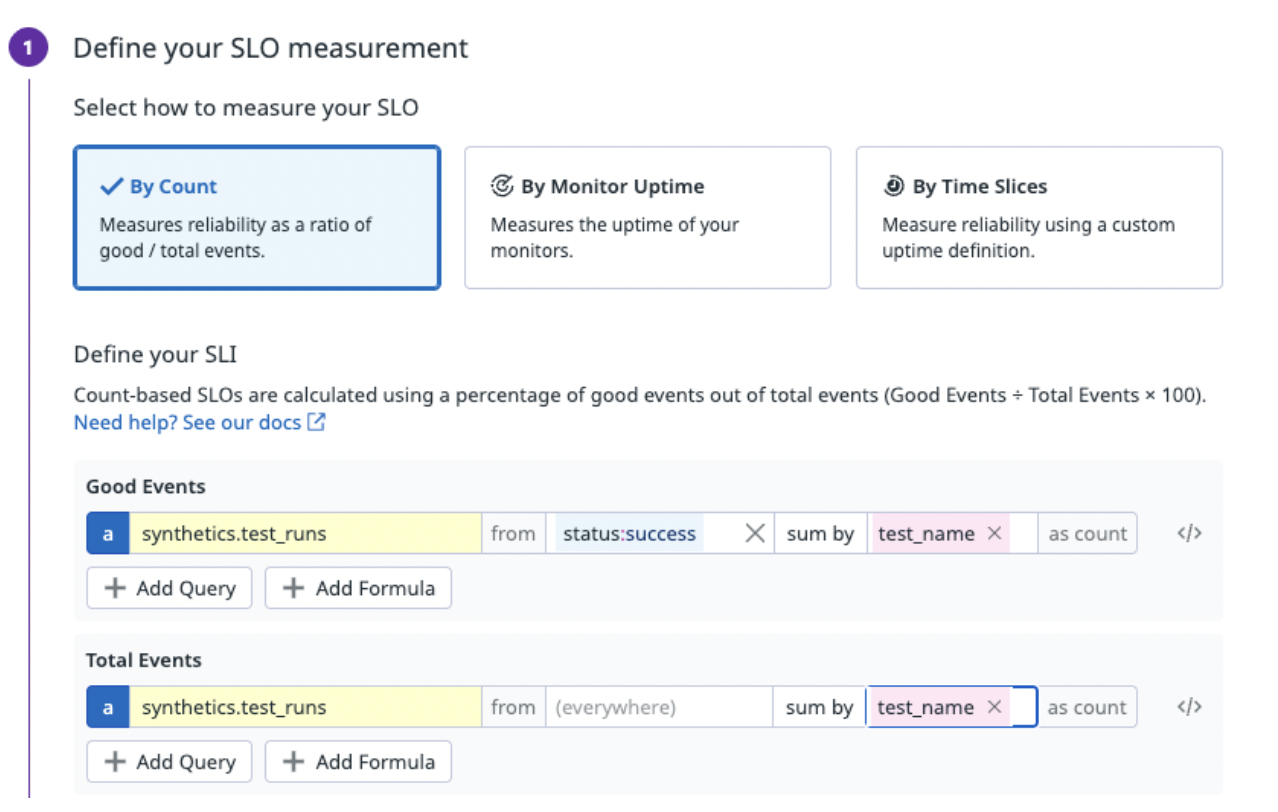

Lastly, you can use these tests to create SLOs in two different ways. The first method is to create a count-based SLO on successful test runs.

This allows you to determine your successful test rate, broken down by test. The other method is to create a monitor-based SLO, using the monitor automatically created by the Synthetic test. There’s a caveat here though: if your test runs every 5 minutes (for example) and a run fails and then succeeds on the next run, your SLO is charged for the full 5 minutes, even if your site was only down for one minute. If your SLO is set to 99.99% over 30 days, the entire error budget is only about 4.3 minutes, so this one failure has already exhausted it, and potentially incorrectly.
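The caveat above is easy to check with a few lines of arithmetic. This is a minimal sketch, assuming the monitor stays in alert for exactly one test interval; the function and variable names are illustrative and not part of any Datadog API.

```python
def error_budget_minutes(target_percent: float, window_days: int) -> float:
    """Allowed 'bad' minutes for a time-based SLO over the window."""
    window_minutes = window_days * 24 * 60
    return window_minutes * (100 - target_percent) / 100


budget = error_budget_minutes(target_percent=99.99, window_days=30)

# Assumption: one failed run keeps the monitor in alert until the next
# successful run, i.e. for one full 5-minute test interval.
charged_minutes = 5

print(f"Error budget:    {budget:.2f} minutes")
print(f"Charged to SLO:  {charged_minutes} minutes")
print(f"Budget exceeded: {charged_minutes > budget}")
```

With a 99.99% target over 30 days the budget works out to roughly 4.32 minutes, so a single failed run on a 5-minute interval is enough to breach it. A shorter test interval or a less aggressive target gives the SLO room to absorb a one-off failure.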